What is Skaffold?

Skaffold is an open-source project from Google that handles the workflow for building, pushing and deploying your application to Kubernetes. It is client-side only, with no on-cluster component, and its highly optimized, tight inner loop gives you instant feedback while developing.

INFO

Skaffold handles the workflow for building, pushing and deploying your application, allowing you to focus on what matters most: writing code.

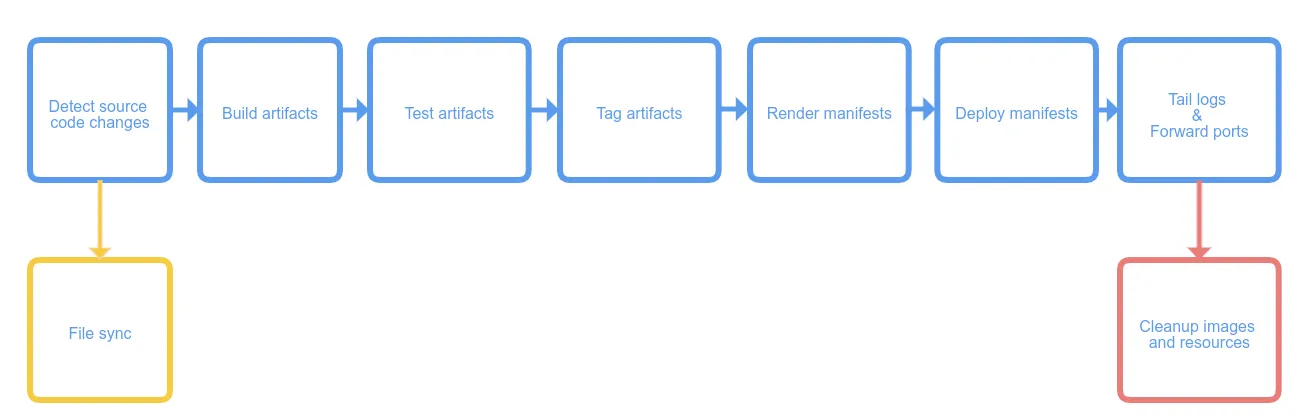

Let’s check out Skaffold’s pipeline stages.

Pipeline Stages

When you start Skaffold…

- it collects source code in your project,

- then builds artifacts with the tool of your choice,

- tags the successfully built artifacts as you see fit,

- pushes to the repository you specify and

- in the end helps you deploy the artifacts to your Kubernetes cluster, once again using the tools you prefer.

INFO

Skaffold allows you to skip stages. If, for example, you run Kubernetes locally with Minikube, Skaffold will not push artifacts to a remote repository.
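Skipping the push can also be made explicit. The fragment below is a minimal sketch of the build section of a skaffold.yaml, assuming you build with the local Docker builder instead of the in-cluster setup used later in this post; the image name is a placeholder. As far as I know, when push is left unset, Skaffold pushes only if the current Kubernetes context points at a remote cluster.

```yaml
# Fragment of a skaffold.yaml (sketch): build locally and never push,
# e.g. when the cluster is a local Minikube.
build:
  local:
    push: false                    # skip the push stage explicitly
  artifacts:
    - image: yourrepo/go-mockoon   # placeholder image name
      docker:
        dockerfile: Dockerfile
```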

Let’s get past the theory and build something usable. Remember my post about mocking APIs in Kubernetes? We’ll be using the Go server and Mockoon for the following Skaffold example.

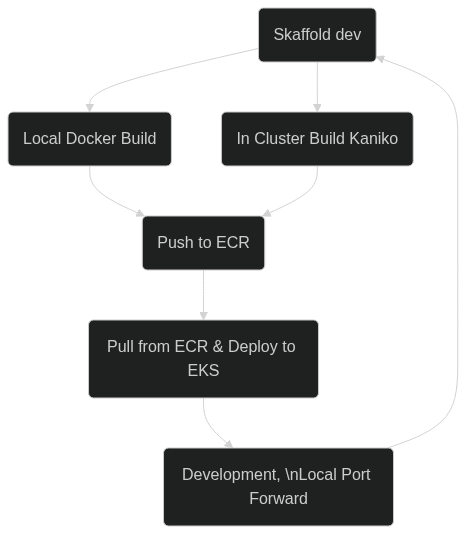

Interlude (1): kaniko

kaniko is a tool to build container images from a Dockerfile, inside a container or Kubernetes cluster.

kaniko doesn’t depend on a Docker daemon and executes each command within a Dockerfile completely in userspace. This enables building container images in environments that can’t easily or securely run a Docker daemon, such as a standard Kubernetes cluster.

kaniko is meant to be run as an image: gcr.io/kaniko-project/executor. Running the kaniko executor binary in another image is not recommended, as it might not work.

The kaniko executor image is responsible for building an image from a Dockerfile and pushing it to a registry. Within the executor image, we extract the filesystem of the base image (the FROM image in the Dockerfile). We then execute the commands in the Dockerfile, snapshotting the filesystem in userspace after each one. After each command, we append a layer of changed files to the base image (if there are any) and update image metadata.
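To make this concrete, here is a minimal sketch of running the executor as a one-off Kubernetes Pod. The Pod name and the Git repository used as build context are placeholders, and the --no-push flag (build only, push nowhere) is chosen so the sketch needs no registry credentials; none of this is part of the setup described in this post.

```yaml
# Sketch: run the kaniko executor image as a one-off Pod.
apiVersion: v1
kind: Pod
metadata:
  name: kaniko-demo              # hypothetical name
spec:
  restartPolicy: Never           # a build runs once; do not restart it
  containers:
    - name: kaniko
      image: gcr.io/kaniko-project/executor:latest
      args:
        # path of the Dockerfile inside the build context
        - --dockerfile=Dockerfile
        # placeholder Git build context; replace with your own repository
        - --context=git://github.com/<your-org>/<your-repo>.git
        # build the image but do not push it to any registry
        - --no-push
```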

In what follows, we’ll use kaniko to build, inside the cluster, the images we want to use with Skaffold.

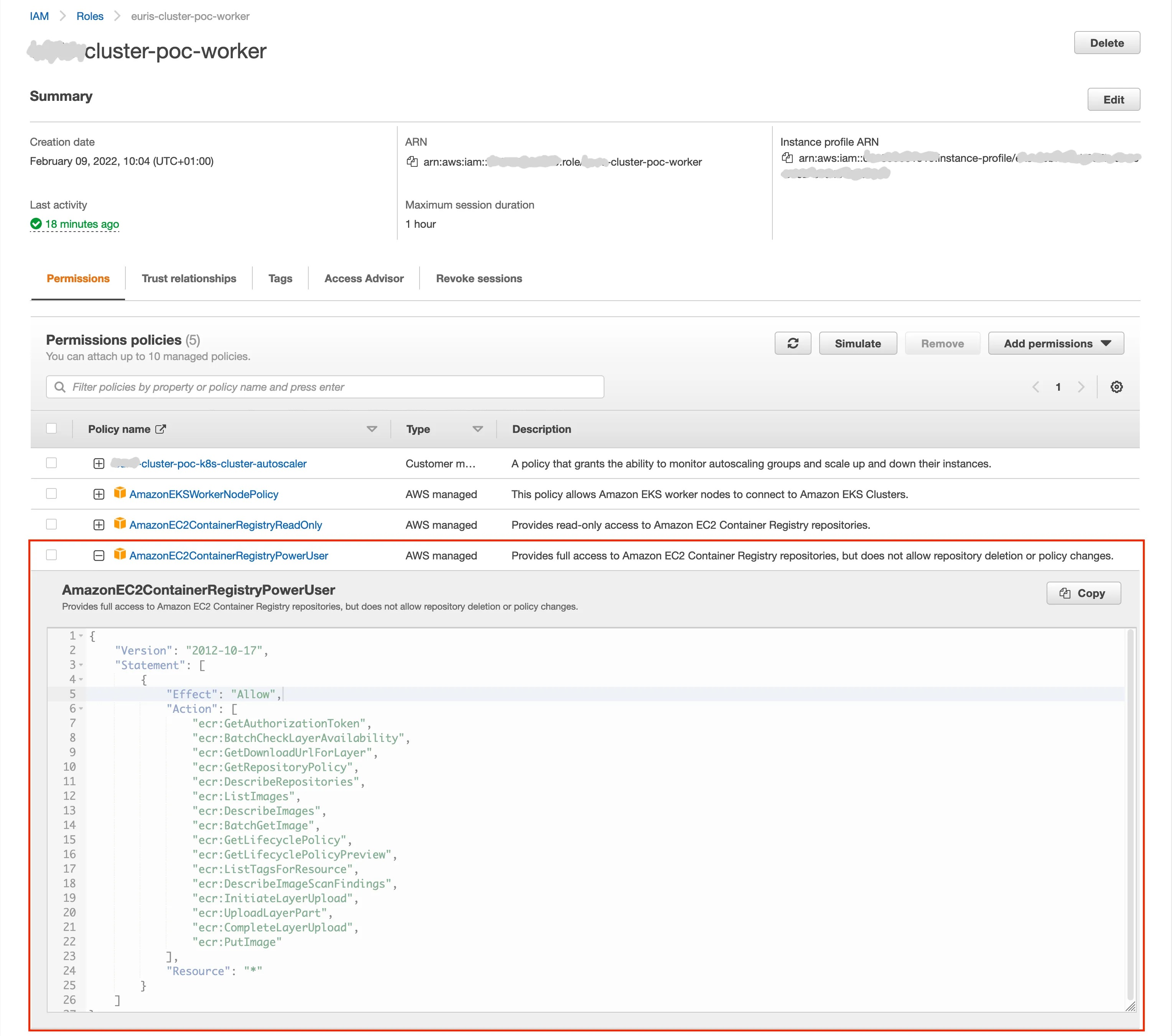

Interlude (2): Preparing AWS EKS & IAM

The Amazon ECR credential helper is built into the kaniko executor image. To configure credentials, you will need to do the following:

- Update the credsStore section of config.json:

      { "credsStore": "ecr-login" }

- Configure credentials:

  - You can use instance roles when pushing to ECR from an EC2 instance or from EKS, by configuring the instance role permissions.

  - Or you can create a Kubernetes secret for your ~/.aws/credentials file so that credentials can be accessed within the cluster. To create the secret, run:

        kubectl create secret generic aws-secret --from-file=<path to .aws/credentials>

You can mount in the new config as a configMap:

    kubectl create configmap docker-config --from-file=config.json

In our example, we’ll just put { "credsStore": "ecr-login" } into a new config.json and create the secret via kubectl create secret generic aws-secret --from-file=config.json, as authentication to ECR is handled by an IAM role.
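For the configMap variant, a standalone kaniko Pod could pick up that config.json roughly as sketched here. The Pod name, build context and destination are placeholders, and the sketch assumes that ECR access comes from the node's instance role, so no ~/.aws/credentials secret is mounted. /kaniko/.docker/ is the directory in which the executor image looks for its Docker config.

```yaml
# Sketch: kaniko Pod that reads config.json from the docker-config configMap.
apiVersion: v1
kind: Pod
metadata:
  name: kaniko-ecr               # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: kaniko
      image: gcr.io/kaniko-project/executor:latest
      args:
        - --dockerfile=Dockerfile
        # placeholder build context and ECR destination
        - --context=git://github.com/<your-org>/<your-repo>.git
        - --destination=<aws_account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>
      volumeMounts:
        # makes config.json (with credsStore: ecr-login) visible to kaniko
        - name: docker-config
          mountPath: /kaniko/.docker/
  volumes:
    - name: docker-config
      configMap:
        name: docker-config      # created with kubectl create configmap
```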

Setting up IAM

Assuming you have some kind of EKS Worker role for your EC2 EKS worker nodes, you have to attach a policy allowing access to ECR. In our example we’ll be using the AWS managed policy AmazonEC2ContainerRegistryPowerUser for testing purposes.

Skaffold Magic 🪄

To get started you need a skaffold.yaml in your project root directory.

    apiVersion: skaffold/v2beta27
    kind: Config
    metadata:
      name: http-mock
    build:
      tagPolicy:
        sha256: {}
      artifacts:
        - image: yourrepo/go-mockoon
          # if you want to build your images locally, use:
          # docker:
          #   dockerfile: Dockerfile
          kaniko:
            dockerfile: Dockerfile
      cluster:
        pullSecretName: aws-secret
    deploy:
      kubectl:
        manifests:
          - go-mockoon-k8s.yaml
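The go-mockoon-k8s.yaml manifest itself is not shown in this post. As a rough sketch of what it might contain, assume one Deployment plus a Service on port 80. The labels and the container port 8080 are guesses, and the separate mockoon Deployment is left out. The part that matters is that the image name matches the artifact in skaffold.yaml, since Skaffold replaces that reference with the freshly built tag at deploy time.

```yaml
# Hypothetical go-mockoon-k8s.yaml (sketch, not the original manifest).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-mockoon
spec:
  replicas: 1
  selector:
    matchLabels:
      app: go-mockoon
  template:
    metadata:
      labels:
        app: go-mockoon
    spec:
      containers:
        - name: go-mockoon
          image: yourrepo/go-mockoon   # must match the artifact image in skaffold.yaml
          ports:
            - containerPort: 8080      # assumed port of the Go server
---
apiVersion: v1
kind: Service
metadata:
  name: go-mockoon
spec:
  selector:
    app: go-mockoon
  ports:
    - port: 80                         # the port Skaffold forwards
      targetPort: 8080
```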

Don’t forget to add the --port-forward flag for local testing:

    $ skaffold dev --port-forward
    (...)
    Starting deploy...
     - deployment.apps/go-mockoon configured
    Waiting for deployments to stabilize...
     - skaffold:deployment/mockoon is ready. [1/2 deployment(s) still pending]
     - skaffold:deployment/go-mockoon is ready.
    Deployments stabilized in 2.487 seconds
    Port forwarding service/mockoon in namespace skaffold, remote port 80 -> http://127.0.0.1:4503
    Port forwarding service/go-mockoon in namespace skaffold, remote port 80 -> http://127.0.0.1:4504

Curl the mock endpoint to check if it works:

    $ curl http://127.0.0.1:4504/
    Hello World!

Great, everything seems to be working fine!

From now on, whenever you change a line of code in your main.go server file, Skaffold will detect the change, automagically rebuild your container with kaniko, push it to ECR and redeploy the go-mockoon pod.